Reviewing and correcting incoming data

The data you collect with SurveyCTO comes in from web forms or mobile devices, passes through the SurveyCTO server, and then continues out to other systems via data exports, real-time publishing, or our various APIs. You can think of the data as passing through a pipeline, transiting through the SurveyCTO server as part of its journey. For each form, there are two ways that you can configure the SurveyCTO part of the pipeline:

- Pass data immediately through (the default). While you can and always should monitor the quality of your incoming data, SurveyCTO defaults to essentially auto-approving every incoming submission as soon as it comes in, releasing it for immediate publishing or export. Once a submission has been finalized and submitted to the SurveyCTO server, it is passed to you unchanged, however it came in. If you find problems in the data, you correct those problems further along in the pipeline, outside of SurveyCTO (e.g., in Stata).

- Review and correct incoming data, before releasing it downstream. Alternatively, you can enable the review and correction workflow for a form, in which case new submissions can be initially held awaiting review. During review, you can examine submissions, comment on them, and make corrections to the data; when ready, you either approve or reject each submission. Only approved submissions are then released downstream via data exports, real-time publishing, and our various APIs.

Review and correction workflow: enabling and configuring

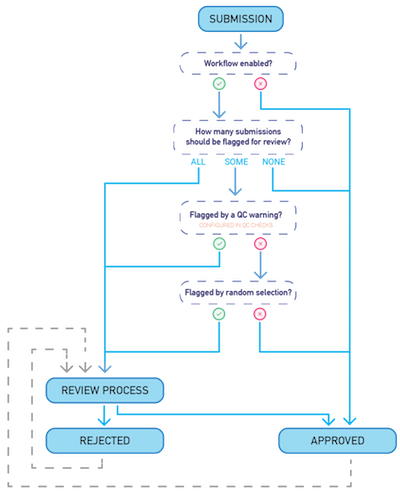

In the Form submissions and dataset data section of the Monitor tab, you can use the Review workflow button to enable or disable the review and correction workflow for any form. When the workflow is disabled (the default), submissions pass through the SurveyCTO server unchanged. When it is enabled, you choose which submissions to flag and hold for review before releasing them for publishing or export:

- ALL. This is the default and almost certainly the setting you should use for new forms. All incoming submissions will be held awaiting review, only released to downstream systems once they have been approved for export or publishing. When testing or piloting a new form, it's often best to look closely at all incoming data, and it gives you a chance to get used to the review and correction process.

- SOME. When you don't have the resources to closely examine every incoming submission, this is often the best choice for deployed forms. Here, you would hold only some submissions for close inspection, based on quality checks and/or random selection. (Exact options discussed below.)

- NONE. If your QC process requires that you use downstream systems or processes to flag submissions for review, you might default to holding no incoming submissions for review. (See our separate help topic for more on advanced workflow configurations like this.)

Whichever submissions aren't held for review will be auto-approved for export and publishing as soon as they come in. If you choose to hold SOME submissions for review, then you get to decide which submissions to hold; you can select any of the following options:

- Flag incoming submissions based on results of quality checks. If you configure automated quality checks for your form, you can use the results of those checks to flag which submissions warrant additional review. (Choose the "only critical" option if you want to restrict consideration to only those quality checks configured as critical.)

- Flag any submission with a submission-specific QC warning. If you select this option and an incoming submission triggers warnings for Value is too low, Value is too high, or Value is an outlier checks, the submission will be held for review. (Note that outlier tests for incoming submissions will be based on the interquartile range calculated the last time the full set of quality checks was run. This is typically the prior night when checks are configured to run nightly.)

- Flag any submission that is part of a group that triggered a QC warning during the last full evaluation. If you select this option and an incoming submission is part of a group that triggered a Group mean is different or Group distribution is different warning in the prior run (generally, the prior night when all checks are run nightly), the submission will be held for review. So for example, if you have a quality check to monitor the gender distribution by enumerator, and a particular enumerator triggered a warning due to their distribution being different, selecting this option would result in all submissions from that enumerator being held for review.

- Flag any submission that would further contribute to a field-specific QC warning raised during the last full evaluation. If you select this option and an incoming submission has a field value that would worsen an existing Value is too frequent, Mean is too low, or Mean is too high warning, that submission will be flagged for review. For example, if the last full quality-check evaluation triggered a warning for a specific field having too many -888 (don't know) responses and an incoming submission also had a -888 response in that field, the submission would be held for review. Likewise, if a field's mean is already triggering warnings because it is above or below a certain threshold – and an incoming submission has a value also above or below that threshold – then the submission would be held for review.

- Flag a random percentage of submissions. Even if you use quality checks to flag submissions for review, it's always good practice to review some percentage completely at random. After all, your quality checks can't catch everything, and in fact random review can help you to identify where and how to strengthen your automated checks. So here, you can specify what percentage of submissions to flag and hold at random. This percentage only applies to those submissions not already flagged based on quality checks (so, e.g., if 20% of incoming submissions get flagged for quality checks and you configure 10% to be flagged at random, only 10% of the 80% that aren't already flagged would be flagged at random).
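To make the arithmetic in the random-percentage option concrete, here is a minimal Python sketch. The function name and parameters are hypothetical and used only for illustration; they are not part of SurveyCTO.

```python
def expected_held_share(qc_flag_rate: float, random_rate: float) -> float:
    """Expected share of all incoming submissions held for review.

    qc_flag_rate: share flagged by quality checks (0.0 to 1.0)
    random_rate:  configured random percentage as a share (0.0 to 1.0),
                  applied only to submissions NOT already flagged
    """
    randomly_flagged = (1.0 - qc_flag_rate) * random_rate
    return qc_flag_rate + randomly_flagged


# The example from the text: 20% flagged by checks, 10% configured at random.
# 10% of the remaining 80% is 8% of all submissions, so about 28% are held.
share = expected_held_share(0.20, 0.10)
print(f"{share:.0%} of incoming submissions held on average")
```

In other words, the configured random percentage is a share of the not-yet-flagged submissions, so the overall share flagged at random is somewhat smaller than the number you enter.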

Note that you can use Value is too low or Value is too high warnings to trigger review based on arbitrary criteria. For example, say that you wanted to review all submissions for women over 75 years old. You could add a calculate field into your form, with a calculation expression like "if(${gender}='F' and ${age}>75, 1, 0)", and then add a Value is too high quality check on that field, to warn whenever it is greater than 0. You could then configure your workflow to flag incoming submissions for review whenever they have submission-specific QC warnings, which would include any submissions for women over 75 years old.
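The logic of that example can be sketched in Python as follows. This only mirrors the reasoning outside of SurveyCTO; the function names are hypothetical, and the actual evaluation happens in the form's calculate field and in the configured quality check.

```python
def review_flag(gender: str, age: int) -> int:
    """Mirror of the form calculation: 1 for women over 75, otherwise 0."""
    return 1 if gender == "F" and age > 75 else 0


def triggers_value_too_high(value: int, threshold: int = 0) -> bool:
    """Stand-in for a 'Value is too high' check that warns above the threshold."""
    return value > threshold


# Hypothetical (gender, age) pairs, for illustration only.
samples = [("F", 80), ("F", 75), ("M", 80)]
held = [triggers_value_too_high(review_flag(g, a)) for g, a in samples]
print(held)
```

Only the first sample would be held: the second is not over 75 and the third is not a woman, so the calculated field stays at 0 and the check does not warn.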

In addition to choosing which submissions to hold for review, you can also configure a range of other workflow options. For example, you can require that users add comments to explain every correction they make to the data, enable or disable a bulk "approve all" option, and, for encrypted forms, choose whether to encrypt comments or leave them open (so that team members without the private key can see them). You can also allow un-approving or un-rejecting submissions, so that your team can reconsider any submission at any time; before you enable un-approving and un-rejecting, though, you should read about the potential consequences for downstream data systems.

Review and correction workflow: actual review, correction, and classification

When there are submissions awaiting review, you will be alerted on the Monitor tab, and you will find options to review and approve submissions in the Form submissions and dataset data section of that tab. There, you will be able to approve waiting submissions in bulk, review them one-by-one, or explore them in aggregate.

You review submissions in the Data Explorer, where you can safely review even encrypted data. For each submission you review, your tasks are as follows:

- Review. Fundamentally, you want to take a careful look at each submission, to assess its quality. Factors to consider include the logical consistency between different responses, corroborating photos and GPS locations, audio audits, "speed limit" violations, the time spent on individual questions, and more. See the help topic on Collecting high-quality data for a broad discussion of considerations and tools at your disposal.

- Classify. You'll need to classify the initial quality of the submission, as it was on receipt. Your options are: GOOD (no problems found), OKAY (one or more minor problems), POOR (serious and/or many problems), or FAKE (fake or fraudulent responses). Classification is subjective, so just do your best to choose the most reasonable classification for each submission.

- Comment and correct. As you discover problems, you can make corrections to the data. You can also add comments, either to the submission overall, or to individual fields or corrections. When it comes to making corrections, please note the following:

  - SurveyCTO allows you to edit the raw data for most fields, and there is no validation to constrain the changes you make – so you have to be careful not to introduce mistakes or invalid data.

  - If you encrypt your data, don't worry: even your corrections will be encrypted so that they are only visible to those who have your private key.

  - The only correction you can make to a GPS field is to delete/clear a value that you believe to be incorrect; you cannot edit such a field to change one GPS position to another.

  - The only correction you can make to a file field – like a photo or audio recording – is to delete/clear values that you believe to be incorrect; you cannot edit such fields to alter the attached files in any way.

  - You cannot change the number of responses within a repeat group; so if there are responses for eight household members in a household roster repeat group, for example, then you can change and/or clear the individual responses, but you cannot add or delete entire repeat instances (there will remain eight, as per the original submission).

- Approve or reject. Ultimately, you will need to approve or reject each submission, at which point it exits the review and correction process. Approved submissions are immediately released for export and publishing. (If you configure your workflow to allow un-approving and un-rejecting, this determination is not final. But you should read about the potential consequences for downstream data systems if you enable these options.)

Review and correction workflow: exporting data

When the review and correction workflow is enabled for a form, you still export your data as you normally do. However, please note the following:

- When you download data from the Your data section of the Export tab or export it using SurveyCTO Desktop, the default is to include only approved submissions – but you can also download rejected or awaiting-review submissions if you select the options to include those as well. If you do include submissions that haven't yet been approved, the export file will include an extra review_status column so that you will know the status of each submission.

- Your exported data will include review_comments and review_corrections columns with a history of comments and corrections (and changes in approval status will be automatically included as comments).

- Your exported data will include a review_quality column with the quality classification recorded for each submission (if any).

- Your exported data will include all corrections made to the data – unless you are using SurveyCTO Desktop and have disabled the Apply corrections setting (in order to export the original, un-corrected data).

- If corrections have been made to the data you export, then a yourformid_correction_log.csv file will be automatically generated for you, with a complete history of corrections made to that data. The columns in that correction log are: KEY, field, old value, new value, user, date/time, and comment. If you download data from the Your data section of the Export tab, you'll have the option of downloading that extra corrections log file; if you export data with SurveyCTO Desktop, it will be automatically output alongside your export files.
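As an illustration of working with the correction log, here is a minimal Python sketch that counts corrections per field. It assumes the CSV header names match the column list above exactly (KEY, field, old value, new value, user, date/time, comment); the real file's header spelling may differ, and the sample rows are invented for the example.

```python
import csv
import io
from collections import Counter

# Hypothetical sample log; header names are assumed from the column list above.
SAMPLE_LOG = """KEY,field,old value,new value,user,date/time,comment
uuid:aaa,age,750,75,reviewer1,2024-01-05 10:00,typo
uuid:aaa,gender,M,F,reviewer1,2024-01-05 10:02,per audio audit
uuid:bbb,age,-5,,reviewer2,2024-01-06 09:30,cleared invalid value
"""


def corrections_per_field(log_file) -> Counter:
    """Count how many corrections were logged for each field."""
    reader = csv.DictReader(log_file)
    return Counter(row["field"] for row in reader)


counts = corrections_per_field(io.StringIO(SAMPLE_LOG))
print(counts.most_common())
```

With a real export, you would pass an opened file instead, for example `open("yourformid_correction_log.csv", newline="")`. Fields that attract many corrections may point to questions that need clearer wording or tighter constraints in the form.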

To learn more about the format of exported data, see Understanding the format of exported data.
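The review_quality column described above can also feed simple summaries. The following Python sketch computes, per enumerator, the share of classified submissions rated POOR or FAKE. The `enumerator` column is hypothetical (your form may identify enumerators differently), and the sketch assumes the classifications are exported using the labels from the text; it upper-cases values in case the export uses a different capitalization.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export excerpt; only review_quality is a documented column here.
SAMPLE_EXPORT = """KEY,enumerator,review_quality
uuid:aaa,E01,GOOD
uuid:bbb,E01,POOR
uuid:ccc,E02,GOOD
uuid:ddd,E02,
uuid:eee,E01,FAKE
"""

PROBLEM_CLASSES = {"POOR", "FAKE"}


def problem_share_by_enumerator(export_file) -> dict:
    """Share of classified submissions rated POOR or FAKE, per enumerator."""
    totals = defaultdict(int)
    problems = defaultdict(int)
    for row in csv.DictReader(export_file):
        quality = (row.get("review_quality") or "").strip().upper()
        if not quality:
            continue  # no classification recorded for this submission
        totals[row["enumerator"]] += 1
        if quality in PROBLEM_CLASSES:
            problems[row["enumerator"]] += 1
    return {name: problems[name] / totals[name] for name in totals}


shares = problem_share_by_enumerator(io.StringIO(SAMPLE_EXPORT))
print(shares)
```

Submissions without a recorded classification are skipped rather than counted as good, so the shares reflect only submissions that were actually reviewed and classified.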

Review and correction workflow: publishing data

When the review and correction workflow is enabled for a form that publishes to the cloud or publishes to a server dataset, only approved submissions will publish. Submissions that are auto-approved on receipt publish right away (no submissions are auto-approved if your workflow is configured to hold all submissions for review), while submissions that are held for review publish only if and when they are approved. Also, all corrections will always be applied to published data. (If you allow un-approving, submissions can publish more than once. See the help topic on advanced workflows to learn more.)

Review and correction workflow: automated quality checks

When you configure automated quality checks for a form that uses the review and correction workflow, you have a decision to make: which submissions should be included when the full set of automated quality checks is evaluated?

By default, all submissions are included – including those that have not yet been reviewed and approved. After all, if you're checking for outliers (for example), then it might be useful to know that a new submission you're reviewing is an outlier; the quality-check reports might be one key input into your review process, as you decide which submissions to approve, what corrections to make, etc.

In the Automated quality checks section of the Monitor tab, you can click Options for any form to change which submissions should be included in the checks. You might, for example, choose to exclude rejected submissions, so that they don't throw off the statistical distributions used to assess differences, outliers, and means.
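To see why rejected submissions can distort outlier detection, consider the following Python sketch. It assumes a conventional interquartile-range rule with fences at 1.5 × IQR beyond the quartiles; the multiplier, the function names, and the sample values are assumptions for illustration, and SurveyCTO's own outlier checks may be configured differently.

```python
import statistics


def iqr_fences(values, k=1.5):
    """Return (low, high) outlier fences at k * IQR beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def is_outlier(value, reference_values, k=1.5):
    """True if value falls outside the fences computed from reference_values."""
    low, high = iqr_fences(reference_values, k)
    return value < low or value > high


# Hypothetical field values: plausible approved data plus rejected fake entries.
approved = [30, 32, 35, 36, 38, 40, 41, 45]
rejected = [200, 220, 250]

print(is_outlier(90, approved))             # fences from approved data only
print(is_outlier(90, approved + rejected))  # fences widened by rejected data
```

With only the approved values as the reference, a new value of 90 falls outside the fences and would be caught. Once the rejected values are included, the interquartile range widens so much that the same value no longer looks unusual, which is the kind of distortion that excluding rejected submissions avoids.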